Abstract: We introduce a novel unsupervised machine learning framework that incorporates the ability of convolutional autoencoders to discover features from images that directly encode spatial information, within a Bayesian nonparametric topic model that discovers meaningful latent patterns within discrete data. By using this hybrid framework, we overcome the fundamental dependency of traditional topic models on rigidly hand-coded data representations, while simultaneously encoding spatial dependency in our topics without adding model complexity. We apply this model to the motivating application of high-level scene understanding and mission summarization for exploratory marine robots. Our experiments on a seafloor dataset collected by a marine robot show that the proposed hybrid framework outperforms current state-of-the-art approaches on the task of unsupervised seafloor terrain characterization.

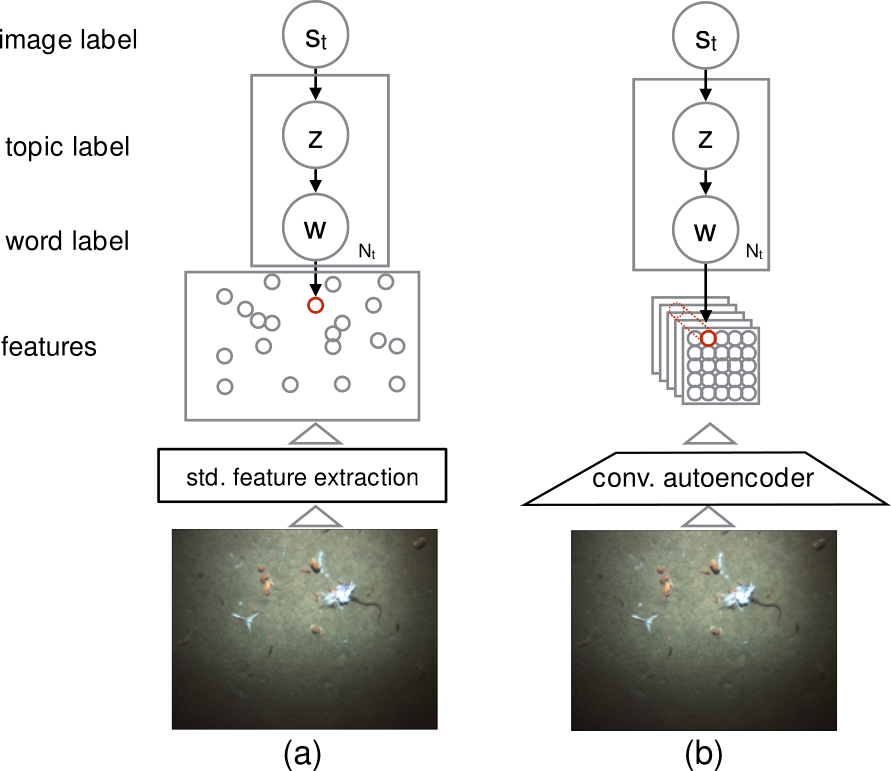

In the figure below, s_t is the image label, and z is the topic label of a visual word w in the input image. (a) A baseline spatiotemporal topic model using standard computer vision features as input. (b) The proposed spatio-temporal topic model using convolutional autoencoder-based features.

The following visualizations correspond Figures 3, 4, and 5 from the paper. Data were collected in dives by the SeaBed AUV at Hannibal seamount in Panama in 2015.

Move the time slider to explore images from the dataset, and observe the corresponding topic distribution or perplexity in the plots.

[Figure 3](http://oort.whoi.edu/~genevieve/panama_03/compare_models/" target="_blank) Results for unsupervised topic models versus hand-annotated terrain labels (b) for the Mission I dataset. Example images from the dataset are shown in (a). To generate plots (c,d), visual words are extracted from an image at time t and assigned a topic label z_i as described in the text. The proportion of words in the image at time t assigned to each topic label is shown on the y-axis, where different topics are represented by colors. Colors are unrelated across plots.

The hybrid HDP-CAE model (c), using more abstract features, is able to define topics that correspond more directly to useful visual phenomena than the HDP model using standard image features (d). The learned feature representation is visualized as described in the text in (e).

[Figure 4](http://oort.whoi.edu/~genevieve/panama_19/compare_models/" target="_blank) Results for two unsupervised topic models versus annotated labels in (b) for the Mission II dataset. Example images from the dataset are shown in (a). The hybrid HDP-CAE model (c) again outperforms the HDP model using standard image features (d). However, the hybrid model fails recognize some of the more transient topics, such as the crustacean swarm at (7). The learned feature representation is visualized as described in the text in (e).

[Figure 5](http://oort.whoi.edu/~genevieve/panama_19/perplexity/" target="_blank) Correlation of perplexity score of the models (c,d,e) with annotated biological anomalies (b) for the Mission II dataset. Example images of biological anomalies are shown in (a). Each image in the dataset was labeled with high, medium, or low perplexity (b). Although all three models do have differential responses in areas of high perplexity, the HDP model using standard features (d) outperforms the hybrid HDP-CAE model (c) and raw reconstruction error from the CAE (e).

Authors: Genevieve Flaspohler, Nicholas Roy, Yogesh Girdhar